
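Background

In the Kubernetes ecosystem, Prometheus has become the de facto standard for monitoring. In practice, however, building a complete monitoring system from scratch can be overly complex: several components have to work together, including metric sources (exporters or the endpoints Kubernetes exposes itself), the monitoring server (Prometheus), the alerting system (Alertmanager), and the visualization platform (Grafana).

On top of that, you need to define and configure many Kubernetes resources, such as Role, ConfigMap, Secret, Service, and NetworkPolicy.

kube-prometheus provides a complete, integrated solution to this problem. It bundles all the necessary components so that monitoring for a Kubernetes cluster can be deployed and managed more efficiently.

Its standardized configuration and community support also help you adopt and maintain best practices quickly, reducing complexity and workload.

It also introduces the Prometheus Operator pattern: through custom resources (defined by CRDs), the deployment, management, and scaling of Prometheus are automated, and the services and applications running in the cluster are discovered automatically.

[Figure: the official Prometheus architecture diagram]

Reference links: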

www.cnblogs.com/hovin/p/18285696

www.cnblogs.com/layzer/articles/prometheus-operator-notes.html

developer.aliyun.com/article/1139137

Practice

Operating system: Ubuntu 20.04

Kubernetes version: v1.28.4

Kubernetes deployment method: kubeadm-ha-release-1.29 (based on kubeadm)

kube-prometheus version: 0.14

| Node type | Hostname | IP |
|---|---|---|
| control-plane | k8s-master-1 | 192.168.2.201 |
| control-plane | k8s-master-2 | 192.168.2.202 |
| control-plane | k8s-master-3 | 192.168.2.203 |
| worker | k8s-worker-1 | 192.168.2.204 |
| worker | k8s-worker-2 | 192.168.2.205 |

Download

Download the release ZIP that matches your Kubernetes cluster version from github.com/prometheus-operator/kube-prometheus, then extract it.

mkdir /ops/kube -p && cd /ops/kube

wget https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v0.14.0.zip

unzip v0.14.0.zip && cd kube-prometheus-0.14.0

List the manifests directory:

# tree manifests/

manifests/

├── alertmanager-alertmanager.yaml

├── alertmanager-networkPolicy.yaml.bak

├── alertmanager-podDisruptionBudget.yaml

├── alertmanager-prometheusRule.yaml

├── alertmanager-secret.yaml

├── alertmanager-serviceAccount.yaml

...

1 directory, 95 files

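manifests/setup contains the Kubernetes CustomResourceDefinitions (CRDs) and the prerequisite infrastructure (the Namespace).

manifests/ contains the Kubernetes manifests that deploy the monitoring components.

Monitoring components: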

- Prometheus

- Alertmanager

- Grafana

- Exporters (such as Blackbox Exporter and Node Exporter)

- Kube State Metrics

- Prometheus Adapter

Kubernetes resource kinds:

- Deployment

- DaemonSet

- Service

- ServiceAccount

- ConfigMap

- Secret

- Role

- RoleBinding

- ClusterRole

- ClusterRoleBinding

- PodDisruptionBudget

- NetworkPolicy

- PrometheusRule

- ServiceMonitor

- PodMonitor

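The NetworkPolicy manifests restrict inbound traffic to the sources listed under `ingress`. If you follow the official GitHub deployment guide, the monitoring components cannot be reached from outside after installation, whether through kube proxy or by switching the Service to NodePort.

Take grafana-networkPolicy.yaml as an example: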

# cat grafana-networkPolicy.yaml.bak

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  labels:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 11.2.0
  name: grafana
  namespace: monitoring
spec:
  egress:
  - {}
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app.kubernetes.io/name: prometheus
    ports:
    - port: 3000
      protocol: TCP
  podSelector:
    matchLabels:
      app.kubernetes.io/component: grafana
      app.kubernetes.io/name: grafana
      app.kubernetes.io/part-of: kube-prometheus
  policyTypes:
  - Egress
  - Ingress

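Network policy is not the focus of this article, so it is skipped during deployment. In a real production environment, solve external traffic routing yourself, for example by adding labels.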
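
To see why a browser request through a NodePort is dropped while this policy is in place, the single ingress rule shown above can be modeled as a small predicate. This is only an illustrative Python sketch, not how a CNI plugin actually enforces NetworkPolicy; the function name `ingress_allowed` and the sample label sets are hypothetical.

```python
# Simplified model of the one ingress rule in grafana-networkPolicy.yaml:
# allow TCP/3000 only from pods labeled app.kubernetes.io/name=prometheus.
ALLOWED_FROM = {"app.kubernetes.io/name": "prometheus"}
ALLOWED_PORT = ("TCP", 3000)


def ingress_allowed(source_pod_labels, protocol, port):
    """Return True if the modeled rule admits the connection.

    source_pod_labels is None when the traffic does not come from a pod in
    the monitoring namespace (for example, a browser hitting a NodePort).
    """
    if source_pod_labels is None:
        return False
    labels_match = all(
        source_pod_labels.get(key) == value for key, value in ALLOWED_FROM.items()
    )
    return labels_match and (protocol, port) == ALLOWED_PORT


if __name__ == "__main__":
    prometheus_pod = {"app.kubernetes.io/name": "prometheus"}
    print(ingress_allowed(prometheus_pod, "TCP", 3000))  # Prometheus scraping Grafana
    print(ingress_allowed(None, "TCP", 3000))            # external client
```

In this model only the Prometheus pods pass, which is consistent with the behavior described above: external clients are not in the `from` list, so they are rejected.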

Simple deployment

The Services default to type ClusterIP. To make Grafana, Prometheus, and Alertmanager reachable from outside the cluster, change them to NodePort:

# vim manifests/grafana-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 11.2.0
  name: grafana
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 30030 # added
  selector:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus

# vim manifests/prometheus-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.54.1
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 30090 # added
  - name: reloader-web
    port: 8080
    targetPort: reloader-web
  selector:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
  sessionAffinity: ClientIP

# vim manifests/alertmanager-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: alert-router
    app.kubernetes.io/instance: main
    app.kubernetes.io/name: alertmanager
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 0.27.0
  name: alertmanager-main
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
  - name: web
    port: 9093
    targetPort: web
    nodePort: 30093 # added
  - name: reloader-web
    port: 8080
    targetPort: reloader-web
  selector:
    app.kubernetes.io/component: alert-router
    app.kubernetes.io/instance: main
    app.kubernetes.io/name: alertmanager
    app.kubernetes.io/part-of: kube-prometheus
  sessionAffinity: ClientIP

Deploy:

# Create the prerequisite resources (Namespace and CRDs)
kubectl apply --server-side -f manifests/setup

# Wait until the CRDs are established
kubectl wait \
  --for condition=Established \
  --all CustomResourceDefinition \
  --namespace=monitoring

# Disable the network policies by renaming the manifests
cd manifests && for f in *networkPolicy.yaml; do mv "$f" "$f.bak"; done && cd ..

# Install kube-prometheus
kubectl apply -f manifests/

# List the Services

# kubectl -n monitoring get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-main NodePort 10.244.89.91 <none> 9093:30093/TCP,8080:31905/TCP 60s

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 52s

blackbox-exporter ClusterIP 10.244.78.10 <none> 9115/TCP,19115/TCP 60s

grafana NodePort 10.244.90.211 <none> 3000:30030/TCP 59s

kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 59s

node-exporter ClusterIP None <none> 9100/TCP 59s

prometheus-adapter ClusterIP 10.244.124.90 <none> 443/TCP 58s

prometheus-k8s NodePort 10.244.89.101 <none> 9090:30090/TCP,8080:31397/TCP 58s

prometheus-operated ClusterIP None <none> 9090/TCP 51s

prometheus-operator ClusterIP None <none> 8443/TCP 58s

# Check the pod status

# kubectl get pod -o wide -n monitoring

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

alertmanager-main-0 2/2 Running 0 55s 10.244.34.160 k8s-worker-2 <none> <none>

alertmanager-main-1 2/2 Running 0 55s 10.244.46.181 k8s-worker-1 <none> <none>

alertmanager-main-2 2/2 Running 0 55s 10.244.34.161 k8s-worker-2 <none> <none>

blackbox-exporter-75c7985cb8-v4bjs 3/3 Running 0 64s 10.244.46.176 k8s-worker-1 <none> <none>

grafana-664dd67585-j595v 1/1 Running 0 63s 10.244.46.177 k8s-worker-1 <none> <none>

kube-state-metrics-66589bb466-pvdwv 3/3 Running 0 63s 10.244.46.178 k8s-worker-1 <none> <none>

node-exporter-gfnkk 2/2 Running 0 63s 192.168.2.204 k8s-worker-1 <none> <none>

node-exporter-gmkwb 2/2 Running 0 63s 192.168.2.203 k8s-master-3 <none> <none>

node-exporter-jc2n2 2/2 Running 0 63s 192.168.2.205 k8s-worker-2 <none> <none>

node-exporter-ktm8k 2/2 Running 0 63s 192.168.2.201 k8s-master-1 <none> <none>

node-exporter-wmnw7 2/2 Running 0 63s 192.168.2.202 k8s-master-2 <none> <none>

prometheus-adapter-77f8587965-dv7h4 1/1 Running 0 62s 10.244.34.159 k8s-worker-2 <none> <none>

prometheus-adapter-77f8587965-kb629 1/1 Running 0 62s 10.244.46.179 k8s-worker-1 <none> <none>

prometheus-k8s-0 2/2 Running 0 55s 10.244.46.182 k8s-worker-1 <none> <none>

prometheus-k8s-1 2/2 Running 0 55s 10.244.34.162 k8s-worker-2 <none> <none>

prometheus-operator-6f9479b5f5-vchn9 2/2 Running 0 62s 10.244.46.180 k8s-worker-1 <none> <none>

Uninstall:

kubectl delete --ignore-not-found=true -f manifests/ -f manifests/setup

You can verify the services from a browser at IP:PORT:

grafana: http://192.168.2.201:30030

prometheus: http://192.168.2.201:30090/

alertmanager: http://192.168.2.201:30093/
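
The same check can be scripted instead of done by hand. The following is a minimal Python sketch that builds a health-check URL for each NodePort listed above. The node IP and ports come from this article; the paths `/api/health` (Grafana) and `/-/healthy` (Prometheus and Alertmanager) are the health endpoints those projects expose. The helper names `health_url` and `is_healthy` are hypothetical.

```python
import urllib.request

NODE_IP = "192.168.2.201"  # any node IP from the table above

# component -> (NodePort from the modified Service, health-check path)
HEALTH_ENDPOINTS = {
    "grafana": (30030, "/api/health"),
    "prometheus": (30090, "/-/healthy"),
    "alertmanager": (30093, "/-/healthy"),
}


def health_url(component, node_ip=NODE_IP):
    """Build the health-check URL for one component."""
    port, path = HEALTH_ENDPOINTS[component]
    return f"http://{node_ip}:{port}{path}"


def is_healthy(component, timeout=3.0):
    """Return True if the endpoint answers with HTTP 200, False otherwise."""
    try:
        with urllib.request.urlopen(health_url(component), timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, HTTPError, and timeouts are all OSError subclasses
        return False


if __name__ == "__main__":
    for name in HEALTH_ENDPOINTS:
        print(name, health_url(name))
```

Calling `is_healthy("grafana")` from a machine that can reach the node performs the actual request; it returns False rather than raising when the port is closed or filtered.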

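Configuration changes

Data persistence

Prometheus

By default the storage type is emptyDir and the Prometheus retention is 1d, which is not suitable for production.

You can switch to hostPath or NFS to persist the data.

There are two kinds of storage drivers:

- in-tree
  - The driver code is built directly into the Kubernetes core code
  - Examples: the early NFS, iSCSI, and AWS EBS drivers
- CSI
  - The driver runs as separate containers
  - It can be updated independently of the Kubernetes version
  - Third-party storage vendors can develop their own drivers

Kubernetes is gradually deprecating the in-tree drivers; CSI is the standard going forward.

The volume behind a PVC can be provisioned dynamically (created automatically) or statically (created manually).

This article does not go deeper into the choice of storage; the following uses CSI with dynamic provisioning as an example: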

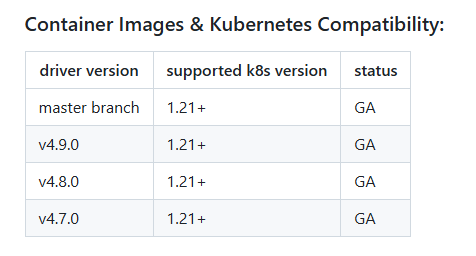

Official GitHub project: NFS CSI driver for Kubernetes

Pick a suitable driver version, then download and deploy it:

git clone https://github.com/kubernetes-csi/csi-driver-nfs.git && cd csi-driver-nfs

# ./deploy/install-driver.sh v4.9.0 local

use local deploy

Installing NFS CSI driver, version: v4.9.0 ...

serviceaccount/csi-nfs-controller-sa created

serviceaccount/csi-nfs-node-sa created

clusterrole.rbac.authorization.k8s.io/nfs-external-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/nfs-csi-provisioner-binding created

csidriver.storage.k8s.io/nfs.csi.k8s.io created

deployment.apps/csi-nfs-controller created

daemonset.apps/csi-nfs-node created

NFS CSI driver installed successfully.

Running the install script deploys the required RBAC objects, the controller component, the node component, and the CSIDriver object.

Verify:

# kubectl get daemonsets.apps -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

calico-node 5 5 5 5 5 kubernetes.io/os=linux 7d18h

calicoctl 5 5 5 5 5 <none> 7d18h

csi-nfs-node 5 5 5 5 5 kubernetes.io/os=linux 7h9m

kube-proxy 5 5 5 5 5 kubernetes.io/os=linux 7d18h

# kubectl get pods -n kube-system -o wide | grep csi-nfs

csi-nfs-controller-f986d5999-z9tfj 4/4 Running 1 (35s ago) 78s 192.168.2.204 k8s-worker-1 <none> <none>

csi-nfs-node-27vhn 3/3 Running 0 78s 192.168.2.201 k8s-master-1 <none> <none>

csi-nfs-node-7qww9 3/3 Running 0 78s 192.168.2.203 k8s-master-3 <none> <none>

csi-nfs-node-85qwn 3/3 Running 0 78s 192.168.2.205 k8s-worker-2 <none> <none>

csi-nfs-node-dfxbb 3/3 Running 0 78s 192.168.2.204 k8s-worker-1 <none> <none>

csi-nfs-node-sblh7 3/3 Running 1 (30s ago) 78s 192.168.2.202 k8s-master-2 <none> <none>

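Install the NFS client on every node:

# Install
apt install nfs-common

# Verify
dpkg -l | grep nfs-common

If you do not have an NFS server yet, you can set one up like this:

# Install
apt-get install nfs-kernel-server

# Add the export to /etc/exports
/disk-st4000vx015-4t-no1/k8s/volume/ 192.168.2.0/24(rw,sync,no_root_squash)

# Reload the service
systemctl reload nfs-server

prometheus-prometheus.yaml runs Prometheus as UID 1000 with fsGroup 2000:

securityContext:
  fsGroup: 2000
  runAsNonRoot: true
  runAsUser: 1000

The NFS CSI driver does not support full permission customization (the combination of owner, group, and permissions).

So either set the directory permissions to 0777 in the StorageClass, or, after Prometheus has started and the PVC has been created, manually change the owner or group of the directory.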
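
The two options can be compared with a simplified model of the classic Unix write check. This Python sketch is only an illustration: it ignores ACLs and NFS-specific behavior such as root squashing, and it assumes that the provisioned directory is initially owned by root:root and that the fsGroup (2000) is one of the process's supplementary groups. The function name `can_write` is hypothetical.

```python
import stat


def can_write(mode, owner_uid, owner_gid, uid, gids):
    """Classic Unix write check for a non-root process (no ACLs)."""
    if uid == owner_uid:
        return bool(mode & stat.S_IWUSR)
    if owner_gid in gids:
        return bool(mode & stat.S_IWGRP)
    return bool(mode & stat.S_IWOTH)


if __name__ == "__main__":
    prometheus_uid, prometheus_gids = 1000, {2000}
    # Directory owned by root:root with mode 0755: Prometheus falls under "other".
    print(can_write(0o755, 0, 0, prometheus_uid, prometheus_gids))
    # Option 1: mountPermissions "0777" in the StorageClass.
    print(can_write(0o777, 0, 0, prometheus_uid, prometheus_gids))
    # Option 2: change the group to 2000 and make the directory group-writable.
    print(can_write(0o775, 0, 2000, prometheus_uid, prometheus_gids))
```

Under these assumptions the first case is denied and both workarounds succeed; the second option keeps the directory closed to unrelated users, at the cost of a manual step per volume.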

Create the StorageClass:

# vim prometheus-data-db-sc.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: prometheus-data-db
provisioner: nfs.csi.k8s.io
parameters:
  server: 192.168.2.11
  share: /disk-st4000vx015-4t-no1/k8s/volume
  mountPermissions: "0777" # without this, Prometheus has no permission to write
reclaimPolicy: Delete
volumeBindingMode: Immediate
mountOptions:
  - nfsvers=4.1

kubectl apply -f prometheus-data-db-sc.yaml

Update the Prometheus manifest:

# Add the following under spec
# vim prometheus-prometheus.yaml
  retention: 30d # how long to keep data
  storage: # storage configuration
    volumeClaimTemplate:
      spec:
        storageClassName: prometheus-data-db
        resources:
          requests:
            storage: 200Gi

Apply the configuration:

kubectl apply -f prometheus-prometheus.yaml
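
Whether 200Gi is enough for 30 days depends on the ingestion rate. The Prometheus documentation gives a rough capacity formula, needed disk space = retention time in seconds × ingested samples per second × bytes per sample, with roughly 1 to 2 bytes per sample. The sketch below applies it in Python; the ingestion rate of 20,000 samples per second is a made-up example value, not a measurement from this cluster.

```python
def needed_disk_bytes(retention_days, samples_per_second, bytes_per_sample=2.0):
    """Rough capacity estimate following the formula in the Prometheus docs."""
    retention_seconds = retention_days * 24 * 60 * 60
    return retention_seconds * samples_per_second * bytes_per_sample


def to_gib(num_bytes):
    """Convert bytes to GiB (2**30 bytes)."""
    return num_bytes / 2**30


if __name__ == "__main__":
    # Hypothetical ingestion rate; read the real one from your own Prometheus.
    estimate = needed_disk_bytes(retention_days=30, samples_per_second=20_000)
    print(f"{to_gib(estimate):.1f} GiB")
```

The real ingestion rate can be read from Prometheus itself, for example with the query `rate(prometheus_tsdb_head_samples_appended_total[5m])`. Leave headroom on top of the estimate for the write-ahead log and compaction.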

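Grafana

The default storage type is emptyDir. Persisting it keeps users, organizations, dashboards, Grafana configuration, plugins, and similar data over the long term.

The approach differs slightly from Prometheus.

Grafana is deployed as a Deployment.

Prometheus, by contrast, is generated by Prometheus Operator: the operator turns the Prometheus custom resource into a StatefulSet.

# cat prometheus-prometheus.yaml
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
...

# cat grafana-deployment.yaml
apiVersion: apps/v1
kind: Deployment
...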

# kubectl get deployment -n monitoring|grep grafana

grafana 1/1 1 1 15h

# kubectl get statefulset -n monitoring|grep prometheus

prometheus-k8s 2/2 12h

- A StatefulSet can use volumeClaimTemplates to create a PVC for each Pod automatically

- A Deployment does not support volumeClaimTemplates, so the PVC has to be created manually and then referenced from the Deployment
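
A StatefulSet names each generated PVC `<template name>-<pod name>`, where the pod name is `<statefulset name>-<ordinal>`. The Python sketch below lists the names to expect for the two Prometheus replicas. The template name `prometheus-k8s-db` is an assumption about what the operator generates for the `k8s` instance; confirm it with `kubectl get pvc -n monitoring`.

```python
def statefulset_pvc_names(template_name, statefulset_name, replicas):
    """PVC names a StatefulSet creates: <template>-<statefulset>-<ordinal>."""
    return [f"{template_name}-{statefulset_name}-{i}" for i in range(replicas)]


if __name__ == "__main__":
    # "prometheus-k8s-db" is assumed, see the note above.
    for name in statefulset_pvc_names("prometheus-k8s-db", "prometheus-k8s", 2):
        print(name)
```

Because each replica gets its own claim, the two Prometheus pods do not share data, whereas the single Grafana Deployment below mounts one manually created PVC.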

Create the StorageClass:

# vim grafana-sc.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: grafana-sc
provisioner: nfs.csi.k8s.io
parameters:
  server: 192.168.2.11
  share: /disk-st4000vx015-4t-no1/k8s/volume
  mountPermissions: "0777"
reclaimPolicy: Delete
volumeBindingMode: Immediate
mountOptions:
  - nfsvers=4.1

# Apply
kubectl apply -f grafana-sc.yaml

Create the PVC:

# vim grafana-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
  namespace: monitoring
spec:
  storageClassName: grafana-sc
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 50Gi

# Apply
kubectl apply -f grafana-pvc.yaml

Update the Deployment manifest:

# grafana-deployment.yaml
...
      volumes:
      # Original storage configuration, commented out
      # - emptyDir: {}
      #   name: grafana-storage
      # Use the PVC instead
      - name: grafana-storage
        persistentVolumeClaim:
          claimName: grafana-pvc

Apply the configuration:

kubectl apply -f grafana-deployment.yaml

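Monitoring analysis

What is Prometheus Operator

Reference link: yunlzheng.gitbook.io/prometheus-book/part-iii-prometheus-shi-zha…

Outside cloud-native environments, operators usually configure and manage Prometheus by hand, and the job and target configuration is also maintained manually.

In a cloud-native environment, however, resources and services scale dynamically and change in real time.

Prometheus Operator watches for changes to custom resources and automates the management of the Prometheus server and its configuration, which removes much of the complexity of managing Prometheus the traditional way.

An Operator is a controller for Kubernetes custom resources. Just as Deployment, DaemonSet, and StatefulSet are used to manage application workloads in the cluster, Prometheus Operator manages the Prometheus-related resources.

CRD objects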

# kubectl get crd | grep monitoring

alertmanagerconfigs.monitoring.coreos.com 2024-12-04T10:39:46Z

alertmanagers.monitoring.coreos.com 2024-12-04T10:39:46Z

podmonitors.monitoring.coreos.com 2024-12-04T10:39:46Z

probes.monitoring.coreos.com 2024-12-04T10:39:46Z

prometheusagents.monitoring.coreos.com 2024-12-04T10:39:47Z

prometheuses.monitoring.coreos.com 2024-12-04T10:39:47Z

prometheusrules.monitoring.coreos.com 2024-12-04T10:39:47Z

scrapeconfigs.monitoring.coreos.com 2024-12-04T10:39:47Z

servicemonitors.monitoring.coreos.com 2024-12-04T10:39:48Z

thanosrulers.monitoring.coreos.com 2024-12-04T10:39:48Z

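kube-prometheus 0.14 provides the custom resources listed above. In practice ServiceMonitor is used the most, so the rest of this article focuses on it.

Alertmanager, ThanosRuler, PrometheusRule, and the like are not the focus of this article; our production environment uses other alerting facilities.

ServiceMonitor matching rules

Although this many CRDs are created, ServiceMonitor is the one used most, because Service is also the most common way we expose workloads.

List the jobs in the Prometheus configuration; all of them come from ServiceMonitors: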

# kubectl exec -n monitoring prometheus-k8s-0 -c prometheus -- cat /etc/prometheus/config_out/prometheus.env.yaml|grep " job_name"

- job_name: serviceMonitor/monitoring/alertmanager-main/0

- job_name: serviceMonitor/monitoring/alertmanager-main/1

- job_name: serviceMonitor/monitoring/blackbox-exporter/0

- job_name: serviceMonitor/monitoring/coredns/0

- job_name: serviceMonitor/monitoring/grafana/0

- job_name: serviceMonitor/monitoring/kube-apiserver/0

- job_name: serviceMonitor/monitoring/kube-apiserver/1

- job_name: serviceMonitor/monitoring/kube-controller-manager/0

- job_name: serviceMonitor/monitoring/kube-controller-manager/1

- job_name: serviceMonitor/monitoring/kube-scheduler/0

- job_name: serviceMonitor/monitoring/kube-scheduler/1

- job_name: serviceMonitor/monitoring/kube-state-metrics/0

- job_name: serviceMonitor/monitoring/kube-state-metrics/1

- job_name: serviceMonitor/monitoring/kubelet/0

- job_name: serviceMonitor/monitoring/kubelet/1

- job_name: serviceMonitor/monitoring/kubelet/2

- job_name: serviceMonitor/monitoring/kubelet/3

- job_name: serviceMonitor/monitoring/node-exporter/0

- job_name: serviceMonitor/monitoring/prometheus-adapter/0

- job_name: serviceMonitor/monitoring/prometheus-k8s/0

- job_name: serviceMonitor/monitoring/prometheus-k8s/1

- job_name: serviceMonitor/monitoring/prometheus-operator/0

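The ServiceMonitor objects that actually exist (one ServiceMonitor can define several endpoints, which is why the job names above end in /0, /1, and so on):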

# kubectl get servicemonitors.monitoring.coreos.com -n monitoring

NAME AGE

alertmanager-main 28h

blackbox-exporter 28h

coredns 28h

grafana 28h

kube-apiserver 28h

kube-controller-manager 28h

kube-scheduler 28h

kube-state-metrics 28h

kubelet 28h

node-exporter 28h

prometheus-adapter 28h

prometheus-k8s 28h

prometheus-operator 28h
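
Judging from the output above, each generated job name has the shape `serviceMonitor/<namespace>/<name>/<endpoint index>`. The Python sketch below parses a few of the names listed earlier and counts the endpoints per ServiceMonitor. The format is inferred from this output rather than taken from a specification, and the helper names are hypothetical.

```python
from collections import Counter


def parse_job_name(job_name):
    """Split 'serviceMonitor/<namespace>/<name>/<index>' into its parts."""
    kind, namespace, name, index = job_name.split("/")
    return {
        "kind": kind,
        "namespace": namespace,
        "name": name,
        "endpoint_index": int(index),
    }


def endpoints_per_monitor(job_names):
    """Count how many scrape jobs each ServiceMonitor produced."""
    return Counter(parse_job_name(job)["name"] for job in job_names)


JOB_NAMES = [
    "serviceMonitor/monitoring/coredns/0",
    "serviceMonitor/monitoring/kubelet/0",
    "serviceMonitor/monitoring/kubelet/1",
    "serviceMonitor/monitoring/kubelet/2",
    "serviceMonitor/monitoring/kubelet/3",
]

if __name__ == "__main__":
    for name, count in endpoints_per_monitor(JOB_NAMES).items():
        print(name, count)
```

This explains the difference in counts: 13 ServiceMonitors produce 22 jobs because several of them, such as kubelet, define more than one endpoint.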

Using the coredns matching rules as an example

The coredns ServiceMonitor definition:

# kubectl get servicemonitors.monitoring.coreos.com -n monitoring coredns -o yaml

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"monitoring.coreos.com/v1","kind":"ServiceMonitor","metadata":{"annotations":{},"labels":{"app.kubernetes.io/name":"coredns","app.kubernetes.io/part-of":"kube-prometheus"},"name":"coredns","namespace":"monitoring"},"spec":{"endpoints":[{"bearerTokenFile":"/var/run/secrets/kubernetes.io/serviceaccount/token","interval":"15s","metricRelabelings":[{"action":"drop","regex":"coredns_cache_misses_total","sourceLabels":["__name__"]}],"port":"metrics"}],"jobLabel":"app.kubernetes.io/name","namespaceSelector":{"matchNames":["kube-system"]},"selector":{"matchLabels":{"k8s-app":"kube-dns"}}}}

creationTimestamp: "2024-12-04T10:40:24Z"

generation: 1

labels:

app.kubernetes.io/name: coredns

app.kubernetes.io/part-of: kube-prometheus

name: coredns

namespace: monitoring

resourceVersion: "1536241"

uid: 2a218f4d-47f9-4e46-a9a9-417a823608c4

spec:

endpoints:

- bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token

interval: 15s

metricRelabelings:

- action: drop

regex: coredns_cache_misses_total

sourceLabels:

- __name__

port: metrics # look for the Service port named "metrics"

jobLabel: app.kubernetes.io/name

namespaceSelector:

matchNames:

- kube-system # namespace to match

selector:

matchLabels:

k8s-app: kube-dns # Service label to match

Now look at the coredns Service (the kube-dns Service is backed by CoreDNS by default):

apiVersion: v1

kind: Service

metadata:

annotations:

prometheus.io/port: "9153"

prometheus.io/scrape: "true"

creationTimestamp: "2024-11-26T16:02:05Z"

labels:

k8s-app: kube-dns # matches selector --> matchLabels in the ServiceMonitor

kubernetes.io/cluster-service: "true"

kubernetes.io/name: CoreDNS

name: kube-dns

namespace: kube-system # matches namespaceSelector --> matchNames in the ServiceMonitor

resourceVersion: "265"

uid: a02f0682-43f2-4fd8-8ad2-7ca9792b9faf

spec:

clusterIP: 10.244.64.10

clusterIPs:

- 10.244.64.10

internalTrafficPolicy: Cluster

ipFamilies:

- IPv4

ipFamilyPolicy: SingleStack

ports:

- name: dns

port: 53

protocol: UDP

targetPort: 53

- name: dns-tcp

port: 53

protocol: TCP

targetPort: 53

- name: metrics # matches endpoints --> port in the ServiceMonitor

port: 9153

protocol: TCP

targetPort: 9153

selector:

k8s-app: kube-dns

sessionAffinity: None

type: ClusterIP

status:

loadBalancer: {}

Finally, look at the Prometheus custom resource:

# kubectl get prometheus -n monitoring k8s -o yaml

...

serviceMonitorNamespaceSelector: {}

serviceMonitorSelector: {}

...

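Putting together the commented lines in the two YAML documents above, the matching rules are:

- The ServiceMonitor's namespaceSelector --> matchNames matches the Service's metadata --> namespace

- The ServiceMonitor's selector --> matchLabels matches the Service's metadata --> labels (here k8s-app)

- The ServiceMonitor's endpoints --> port matches the Service's ports --> name

- The Prometheus CR uses serviceMonitorNamespaceSelector to decide which namespaces to search for ServiceMonitors

- The Prometheus CR uses serviceMonitorSelector to match the metadata --> labels of the ServiceMonitors in those namespaces

For the last two, the default value is empty ({}), which means the Prometheus CR selects the ServiceMonitors in all namespaces regardless of their labels.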
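
The first three rules can be expressed as a short Python sketch, using trimmed-down copies of the coredns ServiceMonitor and the kube-dns Service shown above. It is a simplified model: the real operator resolves the matched port to the endpoints behind the Service, which is not modeled here, and the function names are hypothetical.

```python
def labels_match(match_labels, labels):
    """Every key/value in matchLabels must be present; {} matches everything."""
    return all(labels.get(key) == value for key, value in match_labels.items())


def selected_ports(service_monitor, service):
    """Return the Service port numbers this ServiceMonitor would select."""
    spec = service_monitor["spec"]
    meta = service["metadata"]
    if meta["namespace"] not in spec["namespaceSelector"]["matchNames"]:
        return []
    if not labels_match(spec["selector"]["matchLabels"], meta.get("labels", {})):
        return []
    wanted = {endpoint["port"] for endpoint in spec["endpoints"]}
    return [p["port"] for p in service["spec"]["ports"] if p["name"] in wanted]


COREDNS_MONITOR = {
    "spec": {
        "endpoints": [{"port": "metrics", "interval": "15s"}],
        "namespaceSelector": {"matchNames": ["kube-system"]},
        "selector": {"matchLabels": {"k8s-app": "kube-dns"}},
    }
}

KUBE_DNS_SERVICE = {
    "metadata": {"namespace": "kube-system", "labels": {"k8s-app": "kube-dns"}},
    "spec": {
        "ports": [
            {"name": "dns", "port": 53},
            {"name": "dns-tcp", "port": 53},
            {"name": "metrics", "port": 9153},
        ]
    },
}

if __name__ == "__main__":
    print(selected_ports(COREDNS_MONITOR, KUBE_DNS_SERVICE))  # [9153]
```

If any one of the three conditions fails (wrong namespace, missing label, or no port with the expected name), the result is empty and no target appears in Prometheus, which is the usual cause of a "missing" target.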

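Common metrics

All of the metrics below are collected through ServiceMonitors.

Metric categories:

- Core component metrics
  Source: the metrics endpoints exposed by the API Server, etcd, and CoreDNS themselves

- Node metrics
  Source: node-exporter (runs on every node as a DaemonSet)

- Container and Pod metrics
  Source: cAdvisor, built into the kubelet

- Kubernetes resource object metrics
  Source: kube-state-metrics

- Monitoring component metrics
  Source: the /metrics endpoints of Prometheus and Alertmanager

- Network metrics
  Source: kube-state-metrics (Service-related) and the ingress controller (if deployed)

- Storage metrics
  Source: the kubelet /metrics endpoint

- Controller manager metrics
  Source: the kube-controller-manager metrics endpoint

The kube-prometheus manifests create most of the ServiceMonitors automatically, but etcd, kube-controller-manager, and kube-scheduler need some extra configuration before their metrics can be collected.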

kube-controller-manager metrics collection

As mentioned above, the ServiceMonitor's selector --> matchLabels is matched against the labels of a Service.

At this point, however, there is no Service for kube-controller-manager.

kube-controller-manager runs as a static pod managed directly by the kubelet on each master node; its manifest is /etc/kubernetes/manifests/kube-controller-manager.yaml.

# kubectl get servicemonitors.monitoring.coreos.com -n monitoring kube-controller-manager -o yaml

...

selector:

matchLabels:

app.kubernetes.io/name: kube-controller-manager

# kubectl -n kube-system get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

calico-typha ClusterIP 10.244.90.131 <none> 5473/TCP 10d

kube-dns ClusterIP 10.244.64.10 <none> 53/UDP,53/TCP,9153/TCP 10d

kubelet ClusterIP None <none> 10250/TCP,10255/TCP,4194/TCP 10d

metrics-server ClusterIP 10.244.127.54 <none> 443/TCP 10d

# kubectl get pods -n kube-system kube-controller-manager-k8s-master-

kube-controller-manager-k8s-master-1 kube-controller-manager-k8s-master-2 kube-controller-manager-k8s-master-3

So we need to create a Service manually to expose the metrics port inside the cluster:

# cat prometheus-kube-controller-manager-service.yaml

apiVersion: v1
kind: Service
metadata:
  namespace: kube-system # matches namespaceSelector --> matchNames in the ServiceMonitor
  name: kube-controller-manager
  labels:
    app.kubernetes.io/name: kube-controller-manager # matches selector --> matchLabels in the ServiceMonitor
spec:
  selector:
    component: kube-controller-manager # label on the kube-controller-manager pods
  ports:
  - name: https-metrics # matches endpoints --> port in the ServiceMonitor
    port: 10257 # metrics port
    targetPort: 10257 # metrics port

# kubectl apply -f prometheus-kube-controller-manager-service.yaml

# kubectl get svc -n kube-system |grep controller

kube-controller-manager ClusterIP 10.244.92.57 <none> 10257/TCP 5m10s

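In addition, the manifest on all three masters has to be changed so that the listen address is 0.0.0.0 instead of 127.0.0.1.

The kubelet watches the manifest file and recreates the pod automatically when it changes.

# vim /etc/kubernetes/manifests/kube-controller-manager.yaml
...
spec:
  containers:
  - command:
    ...
    - --bind-address=0.0.0.0 # change this line
    ...

# At this point only the first master has been modified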

# kubectl get pod -o wide -A|grep kube-controller-manager

kube-system kube-controller-manager-k8s-master-1 1/1 Running 0 41s 192.168.2.201 k8s-master-1 <none> <none>

kube-system kube-controller-manager-k8s-master-2 1/1 Running 2 (9d ago) 10d 192.168.2.202 k8s-master-2 <none> <none>

kube-system kube-controller-manager-k8s-master-3 1/1 Running 2 (9d ago) 10d 192.168.2.203 k8s-master-3 <none> <none>
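
Since the same one-line change has to be repeated on every master, it can be scripted. The Python sketch below rewrites the `--bind-address` flag in a manifest passed in as text; the sample fragment is a shortened, hypothetical stand-in for the real static pod manifest. Writing the result back to /etc/kubernetes/manifests/ would make the kubelet recreate the pod, so try it on a copy first.

```python
def patch_bind_address(manifest_text, new_address="0.0.0.0"):
    """Return the manifest with every '--bind-address=' flag set to new_address."""
    patched = []
    for line in manifest_text.splitlines():
        if line.strip().startswith("- --bind-address="):
            # Keep the original indentation and list marker.
            prefix = line[: line.index("--bind-address=")]
            line = f"{prefix}--bind-address={new_address}"
        patched.append(line)
    return "\n".join(patched) + "\n"


SAMPLE = """spec:
  containers:
  - command:
    - kube-controller-manager
    - --bind-address=127.0.0.1
    - --leader-elect=true
"""

if __name__ == "__main__":
    print(patch_bind_address(SAMPLE), end="")
```

Binding to 0.0.0.0 makes the metrics port reachable on every interface of the node, so it is worth checking that the port is not exposed beyond the cluster network.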

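Then open Prometheus and verify that the kube-controller-manager targets are being scraped.

kube-scheduler metrics collection

As with kube-controller-manager, create the Service manually: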

# vim prometheus-kube-scheduler-service.yaml

apiVersion: v1
kind: Service
metadata:
  namespace: kube-system # matches namespaceSelector --> matchNames in the ServiceMonitor
  name: kube-scheduler
  labels:
    app.kubernetes.io/name: kube-scheduler # matches selector --> matchLabels in the ServiceMonitor
spec:
  selector:
    component: kube-scheduler # label on the kube-scheduler pods
  ports:
  - name: https-metrics # matches endpoints --> port in the ServiceMonitor
    port: 10259 # metrics port
    targetPort: 10259 # metrics port
    protocol: TCP

# kubectl apply -f prometheus-kube-scheduler-service.yaml

# kubectl get svc -n kube-system |grep sche

kube-scheduler ClusterIP 10.244.67.0 <none> 10259/TCP 28s

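Change the listen address in the manifest on all master nodes:

# vim /etc/kubernetes/manifests/kube-scheduler.yaml
...
spec:
  containers:
  - command:
    - --bind-address=0.0.0.0
    ...

Then open Prometheus and verify that the kube-scheduler targets are being scraped.

etcd metrics collection

etcd also runs as a static pod managed directly by the kubelet on the master nodes; its manifest is /etc/kubernetes/manifests/etcd-external.yaml.

kube-prometheus does not create an etcd ServiceMonitor.

Compared with kube-scheduler and kube-controller-manager, you additionally need to provide the etcd certificates and create the ServiceMonitor manually.

Create the Secret: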

kubectl create secret generic etcd-ssl --from-file=/etc/kubernetes/pki/etcd/ca.crt --from-file=/etc/kubernetes/pki/etcd/server.crt --from-file=/etc/kubernetes/pki/etcd/server.key -n monitoring

Update the Prometheus manifest to reference the certificates:

# vim prometheus-prometheus.yaml
...
  replicas: 2 # find this line and add the two lines below
  secrets:
  - etcd-ssl
...

# kubectl apply -f prometheus-prometheus.yaml

Create the ServiceMonitor:

# vim servicemonitor-etcd.yaml

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    app.kubernetes.io/name: etcd
    app.kubernetes.io/part-of: kube-prometheus
  name: etcd
  namespace: monitoring
spec:
  endpoints:
  - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
    interval: 30s
    port: https-metrics
    scheme: https
    tlsConfig:
      # the certificate paths are paths inside the Prometheus pod
      caFile: /etc/prometheus/secrets/etcd-ssl/ca.crt
      certFile: /etc/prometheus/secrets/etcd-ssl/server.crt
      keyFile: /etc/prometheus/secrets/etcd-ssl/server.key
      insecureSkipVerify: true
  jobLabel: app.kubernetes.io/name
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      app.kubernetes.io/name: etcd

# kubectl apply -f servicemonitor-etcd.yaml
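
The paths in `tlsConfig` follow from how the Secret is mounted: Prometheus Operator mounts each Secret listed under `secrets` at /etc/prometheus/secrets/<secret name>/, and `kubectl create secret generic --from-file` uses each file's base name as the key. The Python sketch below derives the three paths from the Secret name so they can be compared with the ServiceMonitor; the helper names are hypothetical.

```python
import posixpath

SECRETS_ROOT = "/etc/prometheus/secrets"


def secret_file_path(secret_name, key):
    """Path of one Secret key inside the Prometheus container."""
    return posixpath.join(SECRETS_ROOT, secret_name, key)


def tls_config_for(secret_name):
    """tlsConfig paths for a Secret created from ca.crt, server.crt, server.key."""
    return {
        "caFile": secret_file_path(secret_name, "ca.crt"),
        "certFile": secret_file_path(secret_name, "server.crt"),
        "keyFile": secret_file_path(secret_name, "server.key"),
    }


if __name__ == "__main__":
    for field, path in tls_config_for("etcd-ssl").items():
        print(f"{field}: {path}")
```

If the Secret name or a key name changes, these paths change with it; a mismatch typically shows up as a failed scrape on the etcd target.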

Create the Service:

# vim prometheus-etcd-service.yaml

apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: etcd
  labels:
    app.kubernetes.io/name: etcd
spec:
  selector:
    component: etcd
  ports:
  - name: https-metrics
    port: 2379
    targetPort: 2379
    protocol: TCP

# kubectl apply -f prometheus-etcd-service.yaml

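Then open Prometheus and verify that the etcd targets are being scraped.

Summary

Letting Prometheus Operator manage the Prometheus instances and their configuration greatly reduces the cognitive load on operators.

In most cases, managing the ServiceMonitor objects is all it takes to set up monitoring, as long as you pay attention to the matching rules.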


Reference links

- www.cnblogs.com/hovin/p/18285696

- www.cnblogs.com/layzer/articles/prometheus-operator-notes.html

- developer.aliyun.com/article/1139137

- kubeadm-ha-release-1.29

- github.com/prometheus-operator/kube-prometheus

- NFS CSI driver for Kubernetes

- yunlzheng.gitbook.io/prometheus-book/part-iii-prometheus-shi-zha...